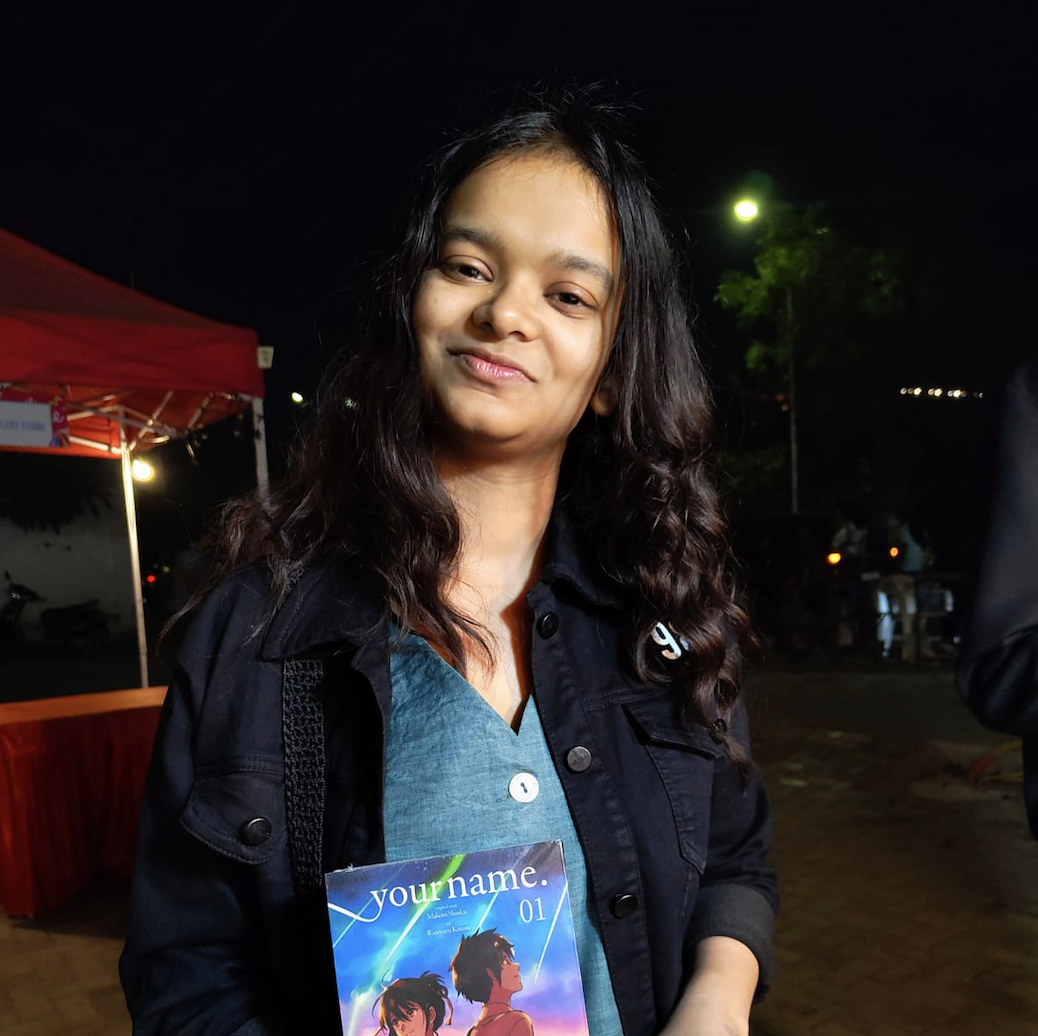

Diksha Shrivastava

I’m an independent AI Safety Researcher based out of India, broadly interested in Scalable Oversight, Natural Abstractions and Scientist AI. My answer to Hamming’s Question is designing truth-seeking agents which discover the causal abstractions in an open-ended world in an external, interpretable and intervenable way. I’m currently working on this agenda with Lossfunk, and supporting the development of an AI Safety ecosystem in India. I’ve previously shipped two products, completed some fellowships, recently graduated, and attended FAEB, the Finnish iteration of ARENA. These days, I spend most of my time thinking about the threat models arising from Open-Endedness and red-teaming research agendas to work with Scalable Oversight.

If you’re a student of Indian origin interested in safety research, and an amount of around 20k (or less) could make a difference for you, please reach out to me.

Note: I’m not active on social media — the best way to reach me is by email.

I’m open to AI Safety Residencies beginning Spring 2026.